Have you ever faced the problem of handling a complicated configuration in an enterprise environment with dozens of interacting components (databases, web services, FTP sites, queues, etc.)?

Mix this with automated deployment and several deployment environments (integration, QA, staging, production) and you have a complex problem to solve. A wrong solution could undermine your whole architecture, making deployment chaotic and untestable.

We've faced this situation and we've come up with what I believe is a nice solution. Let's state our requirements, which is always a good exercise when solving a problem:

R1- Automatic deployment

Our solution must not interfere with automatic deployment.

To me, this means keeping code and configuration separate. I don't want a super-complex deployment script that has to handle every environment, and I don't want to put the passwords for all my environments into that script either.

R2- Ease of use and maintenance for system administrators

Our solution must be easy for system administrators to use and maintain. It must be very easy for them to create and maintain the configuration for each environment and to track changes made to it.

R3- Robustness

I want all the applications to share the same configuration, especially in our environment, where several applications interact with the same databases, web services, etc. It must be impossible for application A to point to the database Db_staging while application B points to Db_integration: they must always point to the same database.

R4- Flexibility

At least in our case, the staging/QA/integration environments are far from identical to our production environment, mainly because of infrastructure costs: we have 10+ servers in production but only 2 servers per environment, since we cannot afford 10+ servers for each one.

Besides, you never know what developers will need in order to test the application on their own machines.

So, our solution must allow a great deal of flexibility, assuming nothing about the different environments.

R5- Ease of use for developers

It must be very easy for developers to read the configuration values and to tweak them in their development environments.

R6- Security

Finally, it was very important for us to have a single database user per application, although this may be a requirement specific to our setup.

The (simplified) solution

S1- Global configuration file: create a file (or files) containing all your configuration resources (database connection strings, FTP connection details, web service URLs, etc.).

We chose an XML file of key/value pairs:

```xml
<add name="MyDB1" value="Data Source=stagingSQL;Initial Catalog=MyDB;User ID=user;Password=pass"/>
```
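As an illustration, here is a minimal Python sketch of how an application or tool might read such a file into a key/value lookup. The article's own code is .NET, and Python is used here only for brevity. The root element name `globalConfig`, the `OrdersService` entry and the function name are hypothetical. Only the `<add name=... value=.../>` shape comes from the file shown above.

```python
import xml.etree.ElementTree as ET
from typing import Dict

# Hypothetical file contents: only the <add name=... value=.../> shape comes from the
# article; the root element name and the second entry are made up for illustration.
SAMPLE = """<globalConfig>
  <add name="MyDB1" value="Data Source=stagingSQL;Initial Catalog=MyDB;User ID=user;Password=pass"/>
  <add name="OrdersService" value="http://staging-orders/api"/>
</globalConfig>"""


def load_global_config(xml_text: str) -> Dict[str, str]:
    """Parse every <add name=... value=.../> element into a key -> value dict."""
    root = ET.fromstring(xml_text)
    config: Dict[str, str] = {}
    for entry in root.iter("add"):
        name, value = entry.get("name"), entry.get("value")
        if name is None or value is None:
            raise ValueError("every <add> element needs both a name and a value")
        if name in config:
            # Two values for one key would break the single source of truth.
            raise ValueError(f"duplicate key: {name}")
        config[name] = value
    return config


if __name__ == "__main__":
    config = load_global_config(SAMPLE)
    print(config["MyDB1"])
```

Rejecting duplicate keys at load time is one cheap way to enforce the "one value per key" guarantee described in R3.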

S2- Create a copy of this file per environment, and put it under version control so that you can check for modifications.

S3- Create a project in your CI server that deploys these configuration files to the right servers whenever a change is detected.
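The S3 step can be pictured as a small script that the CI project runs after each commit to an environment's configuration. The script copies that environment's file to every server and skips servers whose copy is already identical. This is a hypothetical sketch, not a feature of any particular CI tool. The target directories stand in for per-server configuration shares, and the function names are illustrative.

```python
import hashlib
import shutil
from pathlib import Path
from typing import List


def file_digest(path: Path) -> str:
    """SHA-256 of a file's contents, used to detect changes."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def deploy_if_changed(source: Path, target_dirs: List[Path]) -> List[Path]:
    """Copy `source` into every target directory whose copy is missing or different.

    Returns the destination files that were (re)written.
    """
    new_digest = file_digest(source)
    written: List[Path] = []
    for target_dir in target_dirs:
        target_dir.mkdir(parents=True, exist_ok=True)
        destination = target_dir / source.name
        if destination.exists() and file_digest(destination) == new_digest:
            continue  # this server already has the current version
        shutil.copy2(source, destination)
        written.append(destination)
    return written
```

In the CI project, this would be called with one environment's file (for example, the staging copy of global.config) and the list of that environment's servers. Because unchanged servers are skipped, re-running the job is harmless.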

Here is how this approach handles each requirement:

R1- We have automatic deployment of the configuration because it is a simple plain-text file under version control that is automatically deployed by our CI server (or any other mechanism you prefer).

R2- System administrators only have to commit changes to the configuration files in the right branch in order to modify an environment's configuration.

R3- There isn't a configuration file per application but a single "source of truth": the global.config file. There is no way you can get two different values for the same key.

R4- There are no limits to what you can put in a text file. The only requirement is that every environment's file uses the same keys; otherwise the applications won't find the resources they ask for. That is flexible enough, and a few naming conventions keep it simple.

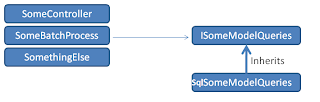
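R4 depends on every environment's file using the same keys, so a check like the following could run in CI before deployment. It is a hypothetical helper that takes already-parsed files (environment name mapped to its key/value dict). It reports which keys each environment is missing and ignores the values, which are expected to differ.

```python
from typing import Dict, Set


def find_missing_keys(env_configs: Dict[str, Dict[str, str]]) -> Dict[str, Set[str]]:
    """For each environment, list the keys that another environment defines but it lacks.

    `env_configs` maps an environment name (e.g. "staging") to its parsed key/values.
    An empty result means every environment defines exactly the same set of keys.
    """
    all_keys: Set[str] = set()
    for values in env_configs.values():
        all_keys |= set(values)

    missing_by_env: Dict[str, Set[str]] = {}
    for env, values in env_configs.items():
        missing = all_keys - set(values)
        if missing:
            missing_by_env[env] = missing
    return missing_by_env


if __name__ == "__main__":
    configs = {
        "staging": {"MyDB1": "Data Source=stagingSQL;Initial Catalog=MyDB",
                    "OrdersFtp": "ftp://staging-ftp"},
        "qa": {"MyDB1": "Data Source=qaSQL;Initial Catalog=MyDB"},
    }
    print(find_missing_keys(configs))  # {'qa': {'OrdersFtp'}}
```

Failing the build on a non-empty result catches a forgotten key before any application asks for it at runtime.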

R5- Build an API to access the configuration values, and make it as transparent as possible for developers. Our client calls look like this:

`Environment.Current.GetDbConnectionString("MyKey")`, which is very similar to the standard .NET `ConfigurationManager.ConnectionStrings["MyKey"]`.
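Here is a minimal Python sketch of such an accessor. The article's API is .NET, where `Environment.Current` is presumably a static property. In this sketch, `current()` is a class method, and `configure()` is a hypothetical hook that receives the values already parsed from global.config. All Python names are illustrative.

```python
from typing import Mapping, Optional


class Environment:
    """Process-wide accessor for the values loaded from global.config (sketch)."""

    _current: Optional["Environment"] = None

    def __init__(self, values: Mapping[str, str]) -> None:
        self._values = dict(values)

    @classmethod
    def configure(cls, values: Mapping[str, str]) -> None:
        """Hypothetical hook: install the key/values parsed from global.config."""
        cls._current = cls(values)

    @classmethod
    def current(cls) -> "Environment":
        """Counterpart of the article's Environment.Current."""
        if cls._current is None:
            raise RuntimeError("Environment has not been configured")
        return cls._current

    def get_db_connection_string(self, key: str) -> str:
        """Counterpart of GetDbConnectionString: fail loudly on unknown keys."""
        try:
            return self._values[key]
        except KeyError:
            raise KeyError(f"no configuration entry named {key!r}") from None


if __name__ == "__main__":
    Environment.configure({"MyDB1": "Data Source=stagingSQL;Initial Catalog=MyDB"})
    print(Environment.current().get_db_connection_string("MyDB1"))
```

Callers only ever see a key lookup, so a developer can point a local box at different resources simply by loading a different file.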

R6- Using a very simple template for the values, you can make your API handle this kind of security automatically:

- Keep a users.config file that maps each ApplicationId to a user/password pair.

- Make your API require the running application to provide its ApplicationId before it can access any configuration values: `Environment.Initialize("MyAppId");`. Any call to `Environment.Current` should fail if no ApplicationId has been provided.

- Make your connection strings look like this:

```xml
<add name="MyDB1" value="Data Source=stagingSQL;Initial Catalog=MyDB;User ID={user};Password={password}"/>
```

- Finally, make `GetDbConnectionString` load the connection string template and the application's user/password from users.config, then replace the placeholders with the actual values.
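Putting the R6 steps together, a minimal Python sketch might look like this. `initialize()` takes the connection-string templates and the users.config mapping as arguments only so that the example is self-contained. The article's `Environment.Initialize("MyAppId")` takes just the ApplicationId and presumably reads both files itself. All Python names are illustrative.

```python
import re
from typing import Mapping, Optional, Tuple

# Matches the {user} and {password} placeholders used in the connection-string templates.
_PLACEHOLDER = re.compile(r"\{(user|password)\}")


class Environment:
    """Sketch of R6: inject per-application database credentials into connection strings."""

    _current: Optional["Environment"] = None

    def __init__(self, app_id: str, templates: Mapping[str, str],
                 users: Mapping[str, Tuple[str, str]]) -> None:
        if app_id not in users:
            raise KeyError(f"ApplicationId {app_id!r} not found in users.config")
        self._app_id = app_id
        self._templates = dict(templates)
        self._users = dict(users)

    @classmethod
    def initialize(cls, app_id: str, templates: Mapping[str, str],
                   users: Mapping[str, Tuple[str, str]]) -> None:
        """Register the running application; must be called before current()."""
        cls._current = cls(app_id, templates, users)

    @classmethod
    def current(cls) -> "Environment":
        if cls._current is None:
            raise RuntimeError("Environment.initialize(app_id, ...) has not been called")
        return cls._current

    def get_db_connection_string(self, key: str) -> str:
        """Look up the template for `key` and fill in this application's credentials."""
        template = self._templates[key]
        user, password = self._users[self._app_id]
        values = {"user": user, "password": password}
        # A single pass: substituted values are never re-scanned for placeholders.
        return _PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


if __name__ == "__main__":
    Environment.initialize(
        "MyAppId",
        {"MyDB1": "Data Source=stagingSQL;Initial Catalog=MyDB;User ID={user};Password={password}"},
        {"MyAppId": ("myapp_user", "secret")},
    )
    print(Environment.current().get_db_connection_string("MyDB1"))
```

Substituting both placeholders in one regular-expression pass means a password that happens to contain the text `{user}` is left untouched. Because credentials live only in users.config, each application connects with its own database user while sharing the same global.config.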

We have found this approach to work very well. We hope it helps you find your own solution, or that you can use this one as is.